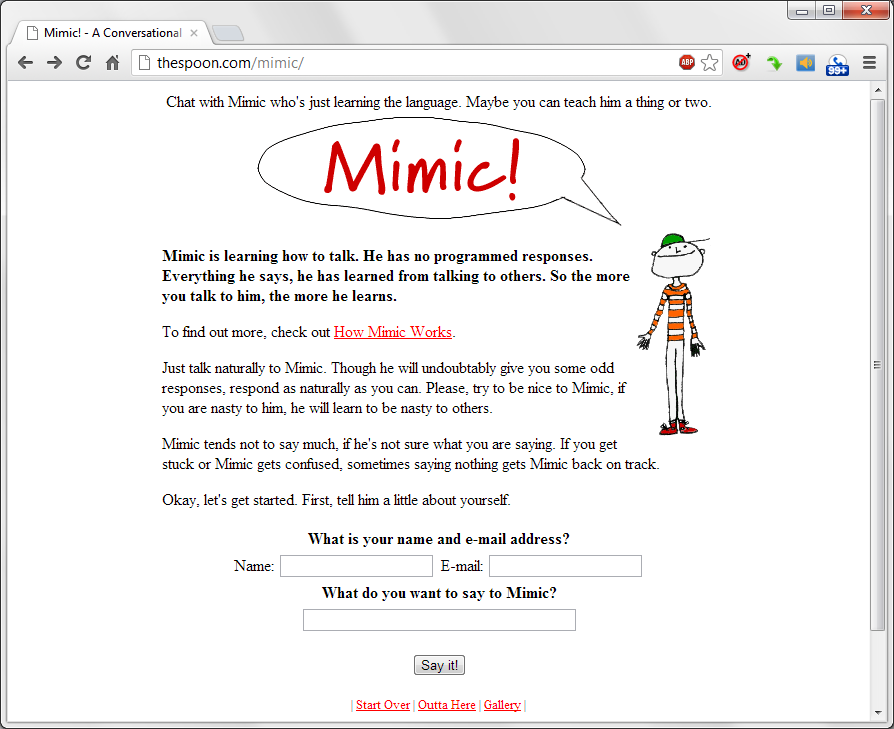

I designed the Mimic chatterbot, a program that simulates an intelligent conversation with a human.

Unlike other conversational robots, Mimic has no built-in knowledge of language. He learns to speak solely by talking to others and remembering a limited amount of context around each exchange.

Mimic works by building a pattern from a tokenized version of the last few things said in the conversation.

When you say something to him, Mimic figures out what to say by finding the closest match from the database for the pattern consisting of what you said, what he said, and a small bit of context. A typical pattern might look like:

how you qq|doing great|what up qq|Well not much, just talking to you.

If this were the pattern matched, Mimic would return “Well not much, just talking to you.”
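As a rough illustration (this is not Mimic's actual code), the pattern lookup described above could be sketched in Python like this. The stop-word list, the rule that turns `?` into `qq`, the three-utterance window, the class and function names, and the use of `difflib.SequenceMatcher` as the "closest match" measure are all assumptions made for the sketch; the original tokenizer and similarity measure are not documented here.

```python
import re
from difflib import SequenceMatcher

# Hypothetical stop words dropped while tokenizing; the real list is unknown.
STOP_WORDS = {"are", "is", "a", "the", "s"}


def tokenize(utterance):
    """Lowercase, turn '?' into a 'qq' marker, drop punctuation and stop words."""
    text = utterance.lower().replace("?", " qq ")
    words = re.findall(r"[a-z0-9]+", text)
    return " ".join(w for w in words if w not in STOP_WORDS)


def make_key(history, window=3):
    """Join the tokenized form of the last `window` utterances with '|'."""
    return "|".join(tokenize(u) for u in history[-window:])


class PatternStore:
    """In-memory stand-in for the flat-file pattern database."""

    def __init__(self):
        self.patterns = {}  # pattern key -> reply text, stored verbatim

    def learn(self, history, reply):
        """Remember what was said in reply to this recent history."""
        self.patterns[make_key(history)] = reply

    def respond(self, history):
        """Return the reply stored under the key most similar to the current one."""
        if not self.patterns:
            return None
        key = make_key(history)
        best = max(
            self.patterns,
            key=lambda k: SequenceMatcher(None, key, k).ratio(),
        )
        return self.patterns[best]


store = PatternStore()
store.learn(
    ["How are you?", "Doing great", "What's up?"],
    "Well not much, just talking to you.",
)
store.learn(
    ["Hello", "Hi there", "What is your name?"],
    "My name is Mimic.",
)

print(make_key(["How are you?", "Doing great", "What's up?"]))
print(store.respond(["Hi", "Hi there", "What's your name?"]))
```

In this sketch the history is ordered oldest to newest, which is one reading of the example pattern: a bit of context, then what Mimic said, then what you just said. The reply is stored untokenized so it can be returned exactly as it was learned.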

I had been toying abstractly with the idea of a similar bot for years, but the design for Mimic came to me in a dream in 1997. I programmed the basic structure within 24 hours starting the next morning.

The idea behind Mimic is roughly adapted from a concept called Associative Pattern Retrieval by Robert A. Levinson at the University of California, Santa Cruz. (more: lite & heavy)

Weaknesses of the algorithm are a lack of pruning, lack of pattern promotion/demotion, a need for better pattern-making, and a need to use a real database (not just a crazy inefficient flat file).

Mimic competed in the Chatterbot Challenge in 2006, and ranked somewhere in the high middle of 65 entries.