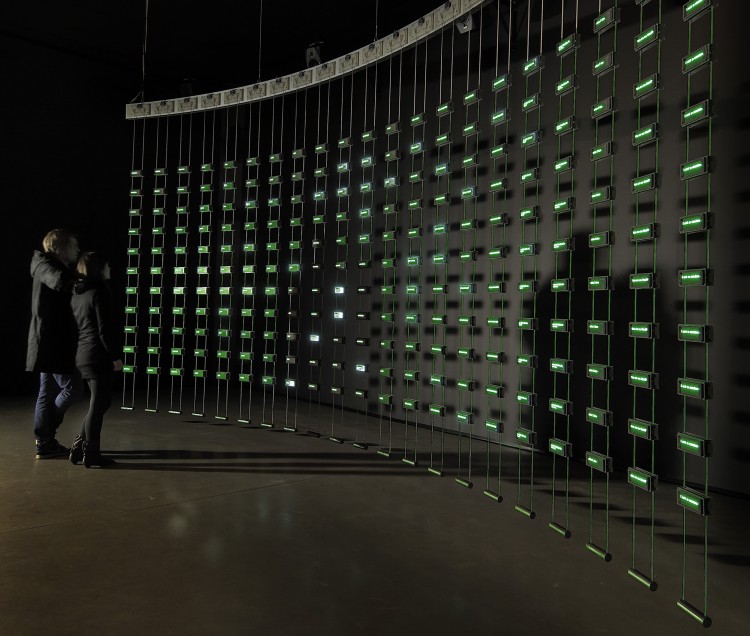

In December 2013, Mark Hansen talked to me about his collaboration with Ben Rubin, the seminal New Media art piece Listening Post. I was researching a paper for submission to a New Media journal and he was gracious enough to answer my technical questions. He also revealed some startling news that changes the entire context of this early 2000s New Media work.

Wes Modes: I wrote you several weeks ago about a manuscript I’m working on regarding your early collaborations with Ben Rubin, specifically Ear to the Ground and Listening Post.

I would like to hear from you and Ben and possibly get some specific questions answered. Do you have a few moments to entertain a few questions?

Mark Hansen: Sure, I’d be happy to talk.

One of my reviewers said, “When I saw it, Listening Post seemed to repeat itself — ie not truly dynamically generated. I wonder, is this really true? Or is the tech a cheat? I’m asking, not accusing… I love the piece (everyone does, and I’m no different) but the complex inner workings described here, it seems to me, would have produced more variation in the outcome. What I saw seemed quite similar, and repetitive.”

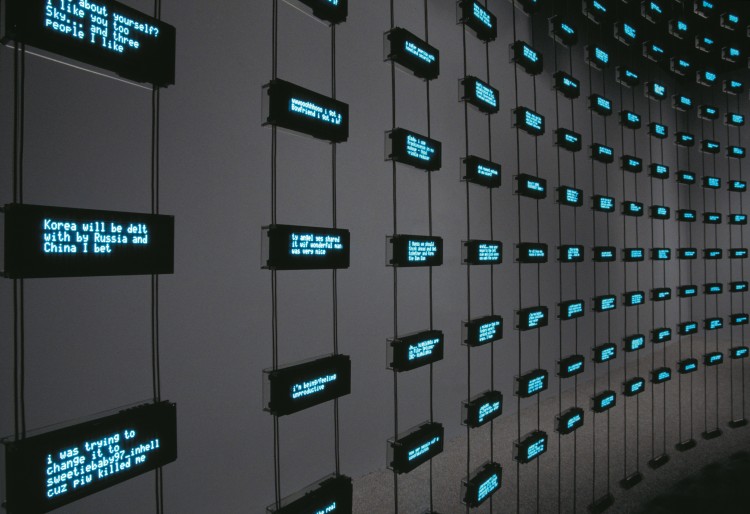

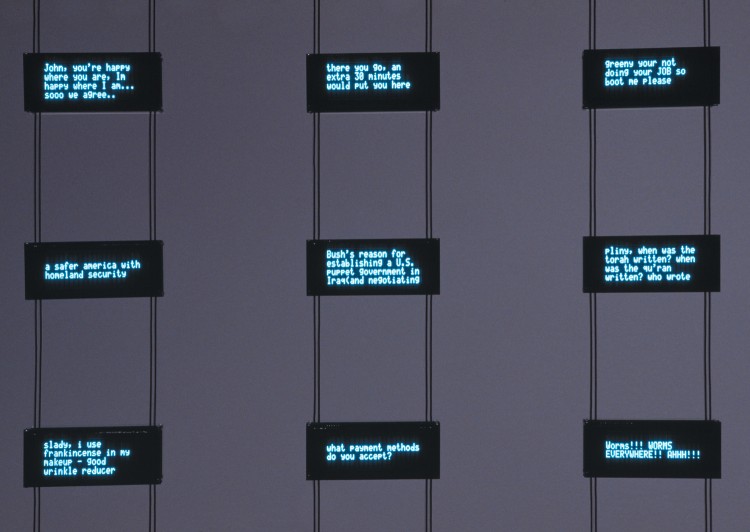

The piece repeats its scene structure but the text should be different… The piece runs through a series of scenes, the whole cycle lasting about 22 minutes. The scene structures will repeat themselves, but the data are meant to be different each time. The scenes are really just simple language processing algorithms that organize and present the data — but these algorithms involved a great deal of craft and experimentation. These overarching structures don’t change and this might be what your reviewer meant.

With many people I talked to, there was a general disbelief that the piece is really using live data all the time. Perhaps they suspect that there is a kind of “demo mode” that it slips into if the internet is having a quiet moment.

There isn’t a demo mode but if the network connection has a problem, it will recycle data. I don’t believe we’ve had a problem on our tours. But even when it recycles, the repetition would be seen over days not hours. We have a large cache of data in case there are network problems.
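The fallback Hansen describes (live data when the network is up, recycled cached data when it is not) can be sketched roughly as follows. This is only an illustration under my own assumptions, not the piece's actual code: the class name `MessageSource`, the `fetch_live` callable, and the cache size are all hypothetical.

```python
from collections import deque
from typing import Callable, Deque


class MessageSource:
    """Serve live messages, recycling cached ones if the network fails.

    Hypothetical sketch: the real Listening Post collection code is not
    described at this level of detail in the interview.
    """

    def __init__(self, fetch_live: Callable[[], str], cache_size: int = 100_000):
        self._fetch_live = fetch_live
        # A bounded cache: the oldest messages fall off once it is full.
        self._cache: Deque[str] = deque(maxlen=cache_size)

    def next_message(self) -> str:
        try:
            message = self._fetch_live()
        except OSError:  # ConnectionError and TimeoutError are subclasses
            if not self._cache:
                raise  # nothing cached yet, so there is nothing to recycle
            # Take the oldest cached message; re-appending it below means a
            # long outage cycles through the whole cache before repeating.
            message = self._cache.popleft()
        self._cache.append(message)
        return message
```

With a large enough cache, any repetition during an outage would only become visible after the entire cache has been replayed, which is consistent with the "days not hours" remark above.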

I’ve heard your and Ben’s thoughts on the lifespan of Listening Post. Have you ever been tempted to update it to mine Twitter or Facebook for data?

We’ve transitioned to Twitter text with the most recent exhibition at Montpellier. It was actually quite effective.

When we first made Listening Post, the data collection required some effort. These days, with Twitter and social media, people often refer to a global conversation… All this seems less magical now.

Wow, that is kinda big news, the transition to Twitter text. Exclusively, or as well as forum and IRC sources? I’d love to know more about that. Hannah Redler went on at length about it in her curatorial notes when it came to the Science Museum. So when it comes back to San Jose from Montpellier, will it be using Twitter?

We will move the Science Museum to tweets if they are OK with it. It does depend on the host being open to it, I think… Curiously, tweets fit beautifully on the screens…

Does it use Twitter exclusively, or in combination with IRC and forums?

In our experiment, it was Twitter exclusively. Our early writing on the piece talked about a “global conversation” and now that seems to be happening more broadly on Twitter, not IRC.

With the transition to Twitter, how does Listening Post summarize the topic of the “room” when there is no clearly-defined room anymore?

We don’t need that, and haven’t used the room topic in a while. The room topic was part of that audio piece we did before LP took shape and had screens added to it.

What changes were required in your statistical analysis to move to Twitter?

The filtering changed… Our TTS sounds awful on certain patterns of symbols, whether they be in IRC or Tweets. The actual patterns of symbols changed when we moved to Twitter but the overall data pipeline remained the same.
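To make the idea of filtering symbol patterns before speech synthesis concrete, here is a minimal sketch. The specific patterns (URLs, @mentions, hashtags, runs of punctuation such as emoticons) are my own guesses at what might trip up a TTS engine on tweets; the interview does not say which patterns Hansen and Rubin actually filter, and the function name `clean_for_tts` is hypothetical.

```python
import re

# Assumed patterns, chosen for illustration only.
URL = re.compile(r"https?://\S+")
MENTION_OR_TAG = re.compile(r"[@#]\w+")
# Any run of characters that is not a word character, whitespace,
# or basic sentence punctuation (covers emoticons like ":-(").
SYMBOL_RUN = re.compile(r"[^\w\s.,!?']+")


def clean_for_tts(text: str) -> str:
    """Strip symbol patterns that a speech synthesizer would read badly."""
    text = URL.sub(" ", text)
    text = MENTION_OR_TAG.sub(" ", text)
    text = SYMBOL_RUN.sub(" ", text)
    # Collapse the whitespace left behind by the removals.
    return " ".join(text.split())
```

Moving from IRC to Twitter would, on this sketch, mean swapping the pattern list while leaving the rest of the pipeline untouched, which matches what Hansen says about the pipeline staying the same.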

In your first ICAD paper, you mention that Listening Post gathers statistics about the characteristics of the discussion (percentage of visitors contributing, how often they contribute, etc.). Is this metadata about the content used in the output of Listening Post in any way?

Well, all of that was open to us. Yes. We never really incorporated it into a scene except to display a list of disassociated user names… a bit like credits. That first paper was written when LP was still in progress.

Early papers on Listening Post describe quite complex statistical processing, for instance the generalized sequence mining algorithm. Has this processing simplified over time?

The original papers on Listening Post are a bit old now. The scenes do make use of specific kinds of filters as well as text clustering. And these are all at the level of words-as-symbols rather than looking at semantics. But you are right, that piece of processing isn’t needed because we are not doing that much per-room summarization… Our initial experiments with the audio-only version of Listening Post and the early grid even had some more advanced processing. As the piece matured, some things simplified.

In an early paper you describe Listening Post as having a piano score, while later it is referred to as having sampled sounds. My observation was a more ambient score during only certain scenes. Did this change over time, or only your description of it?

We developed a number of experiments on our way to Listening Post. It started as a sound piece and there was a synthesized piano score together with a computer voice. Over time, and with new versions of the work, the screens appeared, first in a small grid and then in a larger one.

So is the piano score still present in some of the scenes? Is there other musicality?

There are tones that assemble into something musical based on the action in one scene. Sound animates most scenes, either from the screens or from our audio setup. And yes, in one scene there is a piano score. It is more of a loop really. You can find video of it online.

I’ve seen many hours of video of the piece, but only video. Although a version lives close to me in San Jose, it is out touring in Montpellier.

In the 2002 ICAD paper the display controller was described as a PC running WinNT, while in the 2007 Processing interviews the screen controllers were described as seven servers running Perl. Was this an evolution that came with the expansion of the visual displays?

Two different systems here. The Perl reference was about data collection. The piece has a collection side and a display side. On the display side we use a Linux box to talk to screens and a Windows box for the sound. You can think of Listening Post as a kind of instrument, with systems that render the output of our simple language processing algorithms in sound and on the screens.

Are you willing to share which commercial Text-To-Speech (TTS) system you are using?

It’s not commercial. It was developed by the Bell Laboratories TTS group.

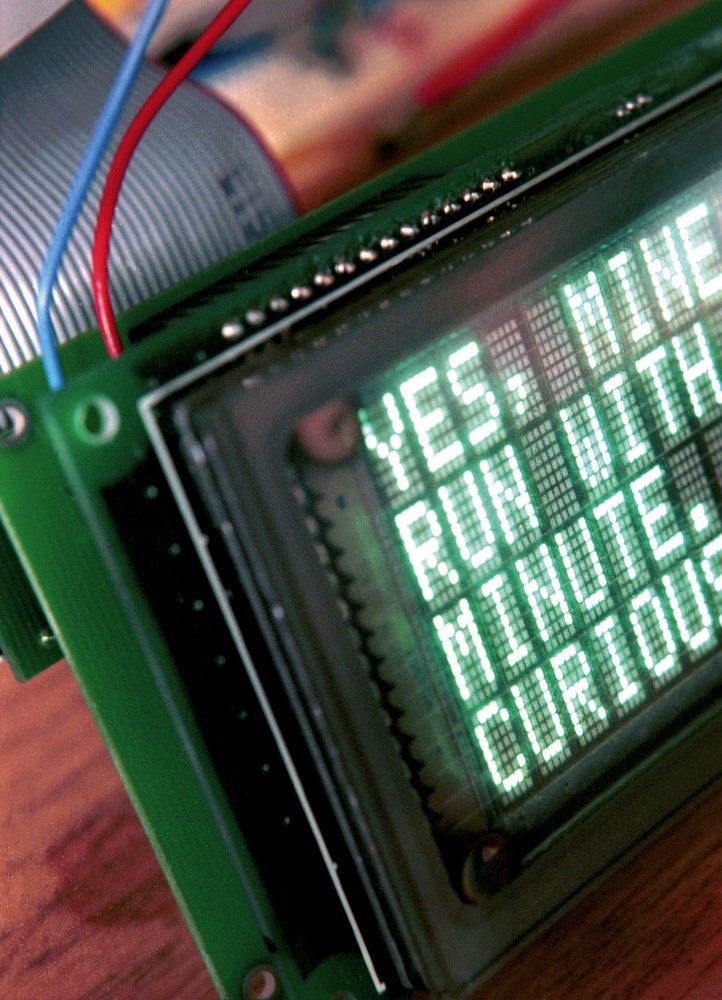

I presume that the Vacuum Fluorescent Displays (VFDs) have their own limited microprocessor. Are you communicating with the VFDs via RS232 serial?

The strands are in groups of 3, each its own circuit. We have an ethernet-to-serial box in the rack that exposes the circuits to our display code. In the end, each screen is individually addressable. How much detail do you need?

The technical details are interesting to me personally as an artist and an engineer. I’d love to know how the VFDs are individually addressable. It helps to know that they are serial because I can begin to conceptualize how a few computers can handle several hundred output screens.

It only takes one computer to drive the grid of screens. We have an ethernet-to-serial box that exposes the separate messages to the different circuits (three strands each). The display units have a switch on the back that lets us set the address, so that each is given an address from 0 to 32 in the circuit (11 rows x 3 strands = 33 devices).
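The addressing arithmetic can be sketched as below. The figures (11 rows per strand, 3 strands per circuit, addresses 0 to 32) come from Hansen's answer; the ordering of addresses within a circuit (strand by strand, top to bottom) and the function name `screen_address` are my assumptions, since the interview does not say how the switches are actually numbered.

```python
ROWS_PER_STRAND = 11       # from the interview
STRANDS_PER_CIRCUIT = 3    # from the interview
DEVICES_PER_CIRCUIT = ROWS_PER_STRAND * STRANDS_PER_CIRCUIT  # 33


def screen_address(strand: int, row: int) -> tuple[int, int]:
    """Map a grid position to (circuit, address within that circuit).

    `strand` counts vertical strands across the whole grid from 0;
    `row` counts screens down a strand from 0. The numbering order is
    an assumption made for illustration.
    """
    if strand < 0:
        raise ValueError("strand must be non-negative")
    if not 0 <= row < ROWS_PER_STRAND:
        raise ValueError("row must be between 0 and 10")
    circuit, strand_in_circuit = divmod(strand, STRANDS_PER_CIRCUIT)
    address = strand_in_circuit * ROWS_PER_STRAND + row
    return circuit, address
```

For example, the bottom screen of the third strand lands on circuit 0 at address 32, and the next strand over starts a new circuit at address 0. A single computer then only needs to pick the right serial circuit and prefix each message with the device address.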

I’ll send a draft of the paper I’m working on when I’ve incorporated your comments. Thanks again.

References and Further Reading

- Baker, Kenneth. “‘Listening Post’ brings the Internet into view.” SFGate, 2007. Web. Retrieved 14 Nov. 2013.

- Hansen, Mark, and Ben Rubin. “Babble Online: Applying Statistics And Design To Sonify The Internet.” Proceedings of the 2001 International Conference on Auditory Display. 2001.

- Hansen, Mark, and Ben Rubin. “Listening post: Giving voice to online communication.” Proceedings of the 2002 International Conference on Auditory Display. 2002.

- Hansen, Mark, and Ben Rubin. “Listening post.” Whitney Museum of American Art. 2003.

- Hansen, Mark, and Ben Rubin. “The audiences would be the artists and their life would be the arts.” Multimedia, IEEE 7.2 (2000): 6-9.

- Hansen, Mark. “Data-Driven Aesthetics.” New York Times, 20 June 2013. Print. Retrieved 11 Dec. 2013. <http://bits.blogs.nytimes.com/2013/06/19/data-driven-aesthetics>.

- Mirapaul, Matthew. “Making an opera from Cyberspace’s Tower of Babel.” New York Times. 10 Dec. 2001. Print. Retrieved 11 Dec. 2013. <www.nytimes.com/2001/12/10/arts/music/10ARTS.html>.

- Reas, Casey, and Ben Fry. Processing: A Programming Handbook for Visual Designers and Artists. Vol. 6812. MIT Press, 2007.

- Redler, Hannah. “Listening Post Curatorial Statement.” Science Museum Arts Project. The Science Museum. 2007. Web. Retrieved 11 Dec. 2013. <http://www.sciencemuseum.org.uk/smap/collection_index/mark_hansen_ben_rubin_listening_post.aspx>.

- Rubin, Ben. “Listening Post.” EAR Studio, 2010. Web. Retrieved 11 Dec. 2013. <http://earstudio.com/2010/09/29/listening-post/>.

- Smith, Roberta. “Mark Hansen and Ben Rubin ‘Listening Post’.” New York Times, 21 Feb. 2003. Print. Retrieved 14 Nov. 2013. <http://www.nytimes.com/2003/02/21/arts/design/21GALL.html>.

- Takeaway Festival. “Listening Post: Interview with Ben Rubin and Mark Hansen” [Video]. 13 May 2008. Retrieved 11 Dec. 2013. <http://www.youtube.com/watch?v=eKHouIXleEE>.

Citing This Interview

Hansen, Mark. “An Interview with Mark Hansen”. Inspiration Comes in the Night. Wes Modes, 2014. Web. Retrieved 18 Jan. 2014. <https://modes.io/mark-hansen-interview/>